Normal Computing was founded in the USA by former members of Google Brain and Google X who helped pioneer AI for the physical world and developed the leading ML frameworks for Probabilistic and Quantum AI.

The infrastructure powering AI models was never designed with today’s scale, complexity, or energy demands in mind. Current general-purpose architectures underutilize the physical potential of the hardware itself.

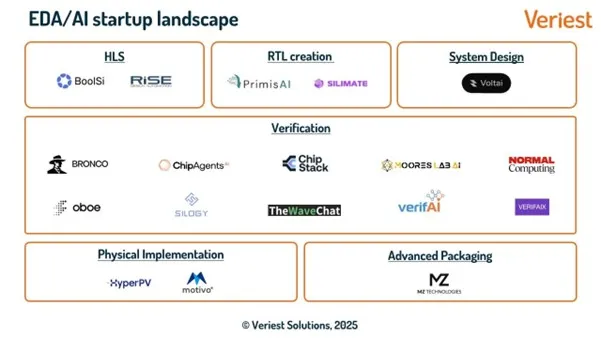

Exploring the limits of new and custom silicon, including chips that optimize their own physics, requires the help of AI, better EDA software, and ultimately a virtuous cycle of self-improving AI hardware.

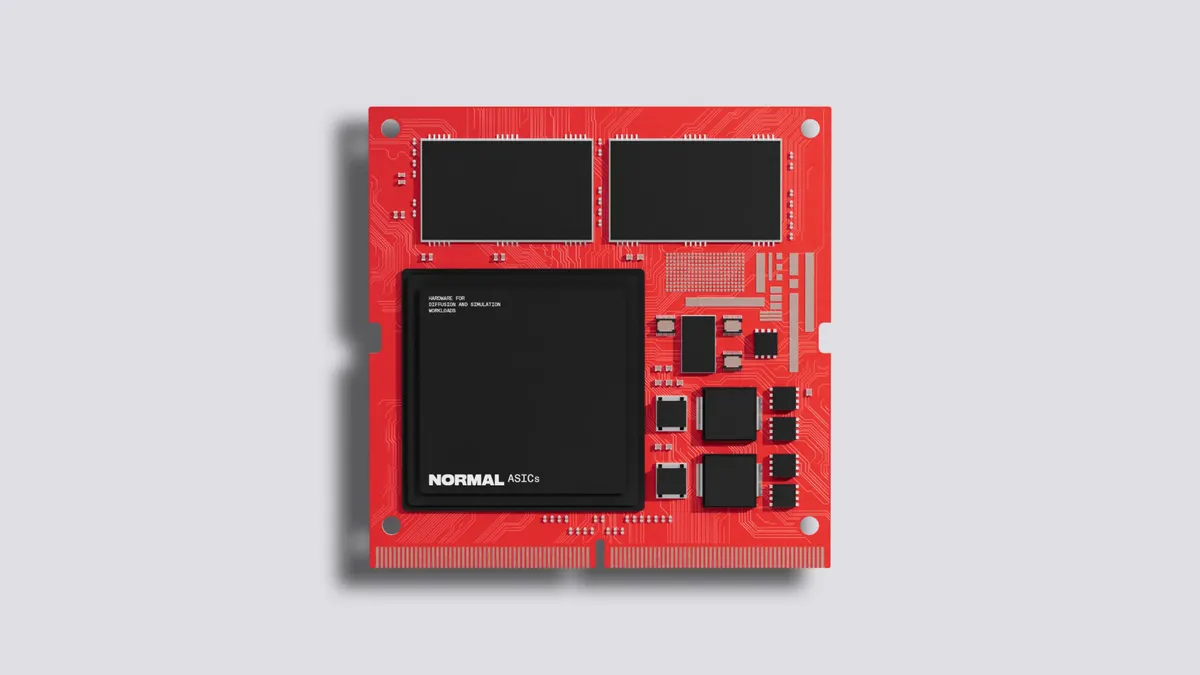
